By Denise Callan, Momentum

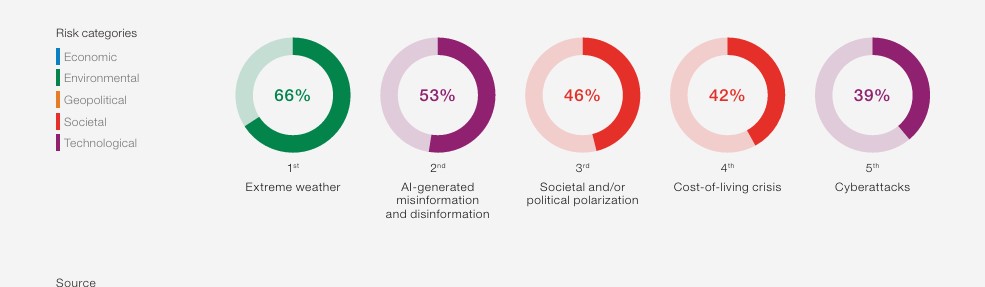

According to the World Economic Forum’s Global Risks Report 2024, manipulated and falsified information is now the world’s second most severe short-term risk; only extreme weather ranks above it.

The report states: “Over the next two years, misinformation and disinformation will present one of the biggest ever challenges to the democratic process.” This risk is further amplified by the increasing use of generative AI to produce “synthetic content”. Such content is created through the artificial manipulation or modification of data, and it aims to deceive or to alter the original purpose of the data or media. Deepfakes are an increasingly common example of this activity.

How is the European Union responding?

The EU’s response goes back to 2020, when the European Commission proposed the Digital Services Act (DSA) to meet the growing need to monitor and moderate online content and activity in the European Union. The Act seeks to prevent illegal and harmful activities online and the spread of disinformation. Under the Act, binding EU-wide obligations apply to all digital services that connect consumers to goods, services, or content, including new procedures for the faster removal of illegal content and comprehensive protection of users’ fundamental rights online. The framework rebalances the rights and responsibilities of users, intermediary platforms, and public authorities, and is based on European values, including respect for human rights, freedom, democracy, equality and the rule of law.

What is the impact of the lack of third-party fact-checking?

Third-party fact-checking has been used to counter the spread of misinformation across social media platforms. When Mark Zuckerberg, CEO of Meta, announced that the company was ending third-party fact-checking in the US, the decision raised concerns. Meta indicated it would follow the example of X, which had previously dispensed with third-party fact-checkers in favour of Community Notes, a crowd-sourcing system in which users submit notes on posts, including flagging them as false or misleading. Meta said it had decided to end the programme because expert fact-checkers had their own biases, which resulted in too much content being fact-checked.

How does the use of Community Notes differ from third-party fact-checking?

The decision by X, and now Meta, to rely on Community Notes raises the risk of manipulated and falsified information spreading. Third-party fact-checkers must be verified by the International Fact-Checking Network (IFCN) and meet its strict standards of non-partisanship. The IFCN recognises that “unsourced or biased fact-checking can increase distrust in the media and experts while polluting public understanding”.

Community Notes rely heavily on user-generated content, so their quality and accuracy can vary considerably, and they are also open to manipulation and bias. For example, coordinated groups can upvote or downvote content to push a particular agenda, leading to the spread of misinformation. Because contributors are not accountable, mistakes and biases are difficult to correct, and the system can favour popularity over truth. Reliance on Community Notes can also undermine a platform’s overall credibility, as users lose trust in the content it publishes.

When X stopped using third-party fact-checkers and moved to Community Notes, several issues arose, including an increase in misinformation and coordinated activity to push specific agendas, which led to biased and inaccurate information being highlighted. Within a short time, many users began to lose trust in the platform, and many reputable accounts were either deleted by their owners or left dormant. This in turn has made X a less reliable and more chaotic source of information. Furthermore, the platform is now widely regarded as actively promoting misinformation and undermining democracy.

What action has the European Commission taken to address this social media platform activity?

In 2023, the European Commission initiated formal proceedings to investigate X’s compliance with the DSA. In July 2024, the Commission issued preliminary findings indicating that X was in breach of the DSA. If these findings are confirmed, X could face fines of up to 6% of its total worldwide annual turnover and be required to take corrective measures. According to European Commission Vice-President Věra Jourová, “X is the platform with the largest ratio of mis- or disinformation posts.”

What next?

For now, Meta’s changes apply only in the United States. However, we must be prepared for the possibility that they will be rolled out in Europe. We also await the final outcome of the Commission’s proceedings against X. These developments highlight the importance of digital media literacy and the need for individuals to develop the skills to interrogate and evaluate the information they receive online, so that they can make informed choices.

The increase in misinformation in recent years and the likely impact of reduced fact-checking highlight the critical need for the ARENAS project and the importance of the work being done by the project and its consortium members. For our part, we have decided to stop activity on X, as the platform and the content it promotes no longer align with the purpose or aims of ARENAS. After discussions with consortium members, we decided to create an ARENAS profile on Bluesky Social and to continue our activity on LinkedIn. For now, the project maintains an active presence on Meta’s Instagram, but we will review this should the changes to third-party fact-checking be introduced in Europe.